Theses

Topic Areas

The Service-centric Networking group offers bachelor's and master's theses in various topic areas.

Please note!

If you are interested in a topic area, please contact our team assistants Andrea Hahn or Sandra Wild (info@snet.tu-berlin.de). Attach your CV, a transcript of records, and some basic background information, such as your degree program and your areas of specialization. Most importantly, include two or three sentences about which topic would generally interest you.

Supervised Theses

2024

| Last name | First name | Bachelor's / Master's thesis | Thesis title | Supervisor |

| Appendino | Benjamin | MA | On-device Mobile Application Traffic Monitoring for iOS | Tom Cory |

| Eichenhofer | Jonathan | MA | Evaluating Monoliths and Microservices for migration to Serverless Architecture | Sanjeet Raj Pandey |

| Fisch | Maximilian | BA | A Deep Learning and NLP-Based Approach for Trace Forecasting in Predictive Process Mining | Wolf Rieder |

| Guo | Hongming | MA | Performance evaluation of trusted communication on QUIC and HTTP/3 protocol | Sanjeet Raj Pandey |

| Kahlert | Alwin | BA | Enabling Trusted Identifiers for Internet of Things | Aljoscha Schulte |

| Knothe | Adam | BA | A sensor and Wi-Fi based approach for indoor localization on smartphones | Christian René Sechting |

| Leonkov | Alex | BA | Privacy-Preserving Telemetry for Crowdsourced Network Traffic Monitoring Applications | Tom Cory |

| Mohona | Tanzira | MA | DIDComm V2 Implementation: A Pathway for Robust Off-chain Applications | Patrick Herbke |

| Natusch | Dennis | MA | Authentication in mTLS with Decentralized Identifiers and Verifiable Credentials | Sandro Rodriguez Garzon |

| Peppas | Dimitrios | MA | Enhancing Resource Modeling and Management in Hybrid Environments through edge-to-cloud simulation | Hai Dinh Tuan |

| Ruban | Anna | BA | Comparing stated and observed Privacy Practices of Mobile Applications | Tom Cory |

| Seita | Daria | MA | A QUIC Look at Mobile Privacy | Tom Cory |

| Zdanowski | Patrick | BA | Development of an interactive digital campus map with navigation functionality for mobile devices | Christian René Sechting |

2023

| Last name | First name | Bachelor's / Master's thesis | Thesis title | Supervisor |

| Fu | Jianeng | MA | Secure messaging agent for 5G Core communication | Hai Dinh Tuan |

| Gharibnejad | Erfan | BA | Securing V2X communication and providing authenticity with DIDs and VCs | Artur Philipp |

| Heitkamp | Kristina | BA | On-device Modification of Mobile Application Security Configurations | Tom Cory |

| Huynh | Thu-My | MA | Design and Implementation of an Interactive Visualization Tool to Increase Transparency of Web Tracking | Philip Raschke |

| Jeong | Soo Min | MA | Matching Online and Offline Users for Hybrid Evaluation of Recommender Algorithms | Tobias Eichinger |

| Kahlert | Alwin | BA | Enabling Trusted Identifiers for Internet of Things | Aljoscha Schulte |

| Kelbel | Vincent | MA | Integration of SSI into the Blade IDM to Enable DIDComm-based Communication in Blade | Sebastian Göndör |

| Kengne Tene | Armand Borel | BA | Integration of Optimization Algorithms for Time Management of Course Preparations in Higher Education | Patrick Herbke |

| Lösche | Simon Luca | BA | Time-series Analysis of Android HTTP Traffic | Tom Cory |

| Mlaouhi | Alaa | BA | Comparison of state management solutions for serverless computing | Maria Mora Martinez |

| Orjuela Pico | Brayan Steven | MA | Decentralized Revocation of Verifiable Credentials | Patrick Herbke |

| Pevzner | Sarah Moriel | BA | DIDComm as Communication Protocol for Self-hosted Decentralized Service Federations | Sebastian Göndör |

| Pfoch | Linus | MA | Derivation of BPMN-based process models from SAP-based product structure data | Kai Grunert |

| Pham | Kevin Hai Nam | BA | Secure Web of Things Discovery with DIDs and VCs | Artur Philipp |

| Reich | Moritz | MA | A Plugin System for a Software as a Service Application based on the Example of PROCEED | Kai Grunert |

| Rieder | Wolf | MA | DIDComm as Communication Protocol for Self-hosted Decentralized Service Federations | Philip Raschke |

| Rohmann | Leon | BA | Towards a Modular Privacy Score Framework for Mobile Applications | Tom Cory |

| Rüger | Tom | MA | Realizing Polyglot Software Modules in Decentralized and Extensible Service Architectures | Sebastian Göndör |

| Schmolenski | Niklas | BA | Detecting latent confounders in purely observational data | Boris Lorbeer |

| Sharma | Ankush | BA | Location-aware Serverless Function Placement Approach in an Edge Environment | Maria Mora Martinez |

| Shawarba | Naseem | BA | A Policy Editor for the JSON-Serialization of Privacy Policies Written in Natural Language | Tobias Eichinger |

| Sindermann | Jean-Pascal | BA | SSI Profile: Using DIDs and VCs for W3C Web of Things (WoT) Thing Description authenticity | Artur Philipp |

| Six | Florian | BA | Adaptive Service Placement based on application level information | Hai Dinh Tuan |

| Stender | Nick Jörn | MA | Verifiable Credentials for Network Access Control | Artur Philipp |

| Westlinning | Steffen | MA | Optimizing Cold Start Latency in Serverless using Function Discovery | Sanjeet Raj Pandey |

| Wilhelm | Mai Khanh Isabelle | MA | Creation of a Middleware for Multi-Vendor Communication with Mobile Robots | Kai Grunert |

| Wittig | Luisa | BA | Performance Analysis of Process Diagrams within the PROCEED Business Process Management System | Kai Grunert |

| Zountsas | Georgios | BA | A platform for automated summaries generation for medical articles | Aikaterini Katsarou |

2022

| Last name | First name | Bachelor's / Master's thesis | Thesis title | Supervisor |

| Abdelkhalek | Yousef | MA | Incorporating OCSP Stapling in EDHOC for Certificate Revocation in Resource Constrained Environments | Aljoscha Schulte |

| Akimov | Grigori | MA | Detecting Liquidity Draining “Rug-Pull” Patterns in CPMM Cryptocurrency Exchanges | Friedhelm Victor |

| Alissa | Fadel | BA | Cross-Browser Comparison of Web Tracker Activity Using T.EX | Philip Raschke |

| Baumann | Florian | BA | A Coarse Location-Service for Collaborating with Approximately Nearest Neighbors | Tobias Eichinger |

| Barkemeyer | David | BA | Implementing an NDNCERT Challenge based on Verifiable Credentials | Aljoscha Schulte |

| Barman | Kaustabh | MA | Managing Higher Education Certificates using Self-Sovereign Identity Paradigm | Patrick Herbke |

| Chada | Wepan | MA | Understanding Adherence to Ecological Momentary Assessments in the Example of the TYDR App | Aikaterini Katsarou |

| Colak | Cihad | BA | ML-based Tracker Detection in Android Applications | Tom Cory |

| Dhakal | Uttam | MA | Abstractive text summarization of scientific articles from Bio-medical domain | Aikaterini Katsarou |

| Dungs | Imke | BA | Creation of BPMN Processes with a Smart Voice Assistant | Kai Grunert |

| Douss | Nabil | MA | Multi-domain Sentiment Analysis with an Active learning Mechanism | Aikaterini Katsarou |

| Frech | Berit | MA | MOBIDID - Decentralized Mobile Messaging using DIDComm | Hakan Yildiz |

| George | Lukas | BA | STARK-based Chain Relays | Martin Westerkamp |

| Hibatullah | Rayhan Naufal | BA | State management in 5G using Akka Serverless | Maria Mora Martinez |

| Hofmann | Pascal | BA | Analysis and Implementation of Secure Key Management in Mobile Wallet Applications | Sebastian Göndör |

| Hrustic | Amira | BA | Analysing Web Tracking in Mobile Android HTTP Traffic | Tom Cory |

| Isaias Sanchez Figueroa | Adrian | BA | Integrating DIDComm Messaging in ActivityPub-based Social Networks | Sebastian Göndör |

| Jie | Anna | MA | An Intelligent Decision Support System for Test Optimization Purposes | Aikaterini Katsarou |

| Joderi-Shoferi | Janis | BA | Adaptive Processes in a decentralized Business Process Management System | Kai Grunert |

| Kalz | Andrea | MA | Synergies between Verifiable Credentials and Information-Centric networks on the example of the Named Data Networking Project | Aljoscha Schulte |

| Keller | Laura | BA | Analysing the Effect of Android Permissions on Mobile Tracking | Tom Cory |

| Kmit | Anastasia | BA | Machine Learning-supported Analysis of Mobile Application Traffic | Tom Cory |

| Krause | Jonas | BA | Managing Higher Education Certificates using Self-Sovereign Identity Paradigm | Patrick Herbke |

| Ksoll | Maximilian | MA | Challenges of implementing microservices as serverless functions | Maria Mora Martinez |

| Kutal | Volkan | BA | Mobile Traffic Data Visualization for Web Tracker Detection | Tom Cory, Philip Raschke |

| Lamichane | Ananta | MA | A Hybrid Evaluation Scheme for Making Qualitative Feedback Available to Recommender Systems Researchers | Tobias Eichinger |

| Liu | Liming | MA | Coordinated Resolution of Compute Request in the Compute-centric Networks | Hai Dinh Tuan |

| Lösche | Simon | BA | Time-series Analysis of Android HTTP Traffic | Tom Cory |

| Lukyanovich | Nastassia | BA | Visualising Mobile Web Traffic Characteristics with an Interactive Dashboard | Tom Cory |

| Matini | Shirkouh | MA | Cryptocurrency volatility prediction using sentiment analysis from social media | Aikaterini Katsarou |

| Mohsen | Mustafa Ismail | BA | Comparative study of causal discovery methods | Boris Lorbeer |

| Nawaz | Hafiz Umar | MA | State persistance evaluation for the stateful serverless platforms | Maria Mora Martinez |

| Odorfer | Roland | MA | Decentralized Identity Management and its Application in Future Cellular Networks | Sandro Rodriguez Garzon |

| Oppermann | Laura | MA | Concept and Design of an Efficient Search and Discovery Mechanism for Decentralized Ledger-based Marketplaces | Sebastian Göndör |

| Rau | Jonathan | MA | Distributed Ledgers as Shared Audit Trails for Carbon Removal | Marcel Müller, Robin Clemens |

| Rhimi | Radhouane | BA | Platform for crowdsourcing hate speech | Aikaterini Katsarou |

| Rucaj | Denisa | MA | Feature-based Extractive Multi-document Summarisation | Bianca Lüders, Aikaterini Katsarou |

| Saadi | Juba | MA | Decentralized Scoring for Adjusting Publication Reach on Online Social Networks | Sebastian Göndör |

| Schulenberg | Emilia | BA | Design and Implementation of a Cloud Wallet for Self-hosted Decentralized Services | Sebastian Göndör |

| Schwerdtner | Henry | BA | Identifying Structural Web Tracker Characteristics With Real-Time Graph Analysis | Philip Raschke |

| Sivirina | Anastasiia | MA | Enabling Verifiable Credentials Interoperability with the Enhancement of the ACAPY Framework | Hakan Yildiz |

| Skodzik | Melanie | MA | Analyzing Market Manipulation on Automated Market Maker based Decentralized Cryptocurrency Exchanges | Friedhelm Victor |

| Song | Yong Huyn | BA | A Comparison of Web Tracking and its Mobile Counterpart | Tom Cory |

| Tsaplina | Olesia | BA | Datenschutz in dezentralen sozialen Netzwerkplattformen: Entwicklung von einem Dashboard zum verteilten Datenschutzmanagement | Philip Raschke, Sebastian Göndör |

| Urban | Tobias | BA | Analyse der Evolution von Featuresets sozialer Netzwerkplattformen | Sebastian Göndör |

| Wang | Mingzhi | MA | MATSim-based Data Diffusion Models for Dissemination-based Collaborative Filtering | Tobias Eichinger |

2021

| Last name | First name | Bachelor's / Master's thesis | Thesis title | Supervisor |

| Akimov | Grigori | MA | Detecting Liquidity Draining “Rug-Pull” Patterns in CPMM Cryptocurrency Exchanges | Friedhelm Victor |

| Ebermann | Marcel | BA | On the Accuracy of Block Timestamp-based Time-sensitive Smart Contracts on Private Permissioned Ethereum Blockchains | Tobias Eichinger |

| Fan | Yuanzhang | MA | Optimizing content dissemination in federated online social networks | Sebastian Göndör |

| Herbke | Patrick | MA | Detection of Web Tracker Characteristics with Graph Analysis Methods | Philip Raschke |

| Hertwig | Kevin | BA | Development of an Identity and Access Management for a decentralized Business Process Management System | Kai Grunert |

| Hrustic | Amira | BA | Analysing Web Tracking in Mobile Android HTTP Traffic | Thomas Cory |

| Jeney | Roxana | MA | Multi-Domain Sentiment Classification using an LSTM-based Framework with Attention Mechanism | Aikaterini Katsarou |

| Kutal | Volkan | BA | Mobile Traffic Data Visualization for Web Tracker Detection | Tom Cory, Philip Raschke |

| Lang | Carolin Sophie | BA | Mapping Company Information to Web Domains for Enhanced User Transparency | Philip Raschke |

| Li | Ziyang | BA | Best Practices for using JavaScript on Resource-Constrained Microcontrollers (changed to: Memory Consumption Analysis for JavaScript Engines on Microcontrollers) | Kai Grunert |

| Mohamed | Gehad Gamal Salem Awad | BA | Intercepting and Monitoring TLS Traffic in Mobile Applications | Thomas Cory |

| Nawaz | Hafiz Umar | MA | State persistance evaluation for the stateful serverless platforms | Maria Mora - Martinez |

| Pandey | Sanjeet Raj | MA | Smart function placement for serverless applications | Maria Mora - Martinez |

| Pelz | Konstantin | BA | A Context-aware Mobile App to Compute Location- and Air Pollution-based Emission Compensations for Car Rides | Sandro Rodriguez Garzon |

| Peppas | Dimitrios | BA | Design and Implementation of a Mobile Sensing App for Experience Sampling | Felix Beierle |

| Rieder | Wolf Siegfried | BA | On The Usefulness of HTTP Responses to Identify Differences Between Non- And Web Trackers | Philip Raschke |

| Rucaj | Denisa | MA | Feature-based Extractive Multi-document Summarisation | Bianca Lüders, Katerina Katsarou |

| Ryu | Youngrak | MA | Data storage in DHTs: A Framework for storing larger data in Kademlia | Martin Westerkamp, Dirk Thatmann |

| Sarder | Uma | MA | Design and Implementation of Control Flow and Permission Management for Polyglot Distributed Service Modules in the Blade Ecosystem | Sebastian Göndör |

| Schneider | Maximilian | BA | A Web Service to Enable the Computation of Dynamic Air Pollution-aware Road User Charges on Mobile Devices | Sandro Rodriguez Garzon |

| Stumpf | Julien | BA | Using Ad Blocking Filter Lists for Automated Labeling of Web Tracker Traffic | Philip Raschke |

| Syed Qasim | Hussain | MA | Blockchain-based Trusted Execution Environments for Privacy-preserving Medical Research | Marcel Müller |

| Yang | Huaning | BA | How Representative Is Measured Network Traffic: Individual Browsing Behavior And Its Technical Manifestation | Philip Raschke |

Graduate Seminar

The seminar is a forum for scientific discussion and active exchange. Students have the opportunity to discuss their theses with fellow students, graduates, the staff of the research group, and the professor. In the early phase of a thesis, the proposed approach is discussed; at the end, the results are presented. Students currently working on their thesis at our chair are required to attend all sessions, especially when related topics are presented. We also welcome other students who are interested in the seminar or who plan to write a thesis at our chair in the future.

Please note:

Students who decide to write a thesis at our chair must give an initial presentation in the early phase of their thesis, introducing their topic and the approach they will pursue. This presentation should last 10 minutes, followed by 5 minutes for questions and answers.

After completing the thesis, students must defend it in a talk that presents the results achieved. Bachelor's students should present for 15 minutes and allow 5 minutes for questions and answers, while master's students present for 20 minutes, followed by 10 minutes of questions and answers.

Scheduled Graduate Seminar Dates

2024

24 April 2024 (online meeting)

| Title | Presentation | Student | Time | Supervisor |

| Time Series Analysis in Process Mining | Bachelor Initial | Lukas Rohana | 14.15 | Wolf Rieder |

| Reinventing Supply Chains Through Escrow Smart Contracts | Bachelor Initial | Elias Safo | 14.35 | Kaustabh Barman |

| Chained Verifiable credentials as verifiable receipts in supply chain | Bachelor Initial | Luca Janssen | 14.55 | Kaustabh Barman |

| Generation of BPMN Diagram using Large Language Models | Bachelor Initial | Marten Kant | 15.15 | Kai Grunert |

| Decentralized Revocation of Verifiable Credentials | Master Defense | Brayan Steven Orjuela Pico | 15.35 | Patrick Herbke |

3 April 2024

| Title | Presentation | Student | Time | Supervisor |

| Design and Implementation of a Persistence Layer on the Example of the Cloud Application PROCEED | Bachelor Initial | Anish Sapkota | 14.15 | Kai Grunert |

| Development of an interactive digital campus map with navigation functionality for mobile devices | Bachelor Defense | Patrick Zdanowski | 14.35 | Christian René Sechting |

| A sensor and Wi-Fi based approach for indoor localization on smartphones | Bachelor Defense | Adam Knothe | 15.05 | Christian René Sechting |

| Decentralized Revocation of Verifiable Credentials | Master Defense | Brayan Steven Orjuela Pico | 15.35 | Patrick Herbke |

| Dynamic Cookie-Banner Generation | Bachelor Initial | Nazim Yolcu | 16.15 | Philip Raschke |

| Decentralized Credential Status Management with Bloom and Cuckoo Filter: A Performance Comparison in Hyperledger Fabric | Bachelor Initial | Ali Mohammadi | 16.35 | Patrick Herbke |

6 March 2024

| Title | Presentation | Student | Time | Supervisor |

| Privacy-Preserving Telemetry for Crowdsourced Network Traffic Monitoring Applications | Bachelor Defense | Alex Leonkov | 14.15 | Tom Cory |

| Using Graph Neural Networks for Web Tracker Detection | Bachelor Initial | Kai Stross | 14.45 | Wolf Rieder |

| Empowering Off-Chain Applications through the Implementation of DIDComm V2 | Bachelor Initial | Tanzira Mohana | 15.05 | Patrick Herbke |

| Time Series Analysis in Process Mining | Bachelor Initial | Lukas Rohana | 15.25 | Wolf Rieder |

| RPA with LLMs | Master Initial | Nick Reiter | 15.45 | Wolf Rieder |

7 February 2024

| Title | Presentation | Student | Time | Supervisor |

| A Plugin System for a Software as a Service Application based on the Example of PROCEED | Master Defense | Moritz Reich | 14.15 | Kai Grunert |

| A Deep Learning and NLP-Based Approach for Trace Forecasting in Predictive Process Mining | Bachelor Defense | Maximilian Oliver Fisch | 14.55 | Wolf Rieder |

| Deployment Strategies in the Cloud-Edge Continuum: Is Unikernel Container 2.0? | Master Initial | Luis Borges | 15.25 | Hai Dinh Tuan |

| Towards a Modular Privacy Score Framework for Mobile Applications | Bachelor Defense | Leon Rohmann | 15.45 | Tom Cory |

| Comparing stated and observed Privacy Practices of Mobile Applications | Bachelor Defense | Anna Ruban | 16.15 | Tom Cory |

| Integration of Optimization Algorithms for Time Management of Course Preparations in Higher Education | Bachelor Initial | Armand Borel Kengne Tene | 16.45 | Patrick Herbke |

10 January 2024

| Title | Presentation | Student | Time | Supervisor |

| Verifiable Credentials for Network Access Control | Master Defense | Nick Jörn Stender | 14.15 | Artur Philipp |

| Forensic Checkpointing for microservice portability | Master Initial | Jialun Jiang | 14.55 | Hai Dinh Tuan |

| Beyond Traditional Algorithms: How Large Language Models are Transforming Process Discovery in Process Mining | Master Initial | Heyi Li | 15.15 | Wolf Rieder |

| Token flow analysis for process mining on blockchain data | Master Initial | Tom Funke | 15.35 | Richard Hobeck |

FAQ

This section covers general topics and frequently asked questions about the organizational process for bachelor's, master's, and diploma theses at our chair. Please read this page carefully before contacting one of the supervisors, so that you are well prepared when you express your interest and discuss topics with them.

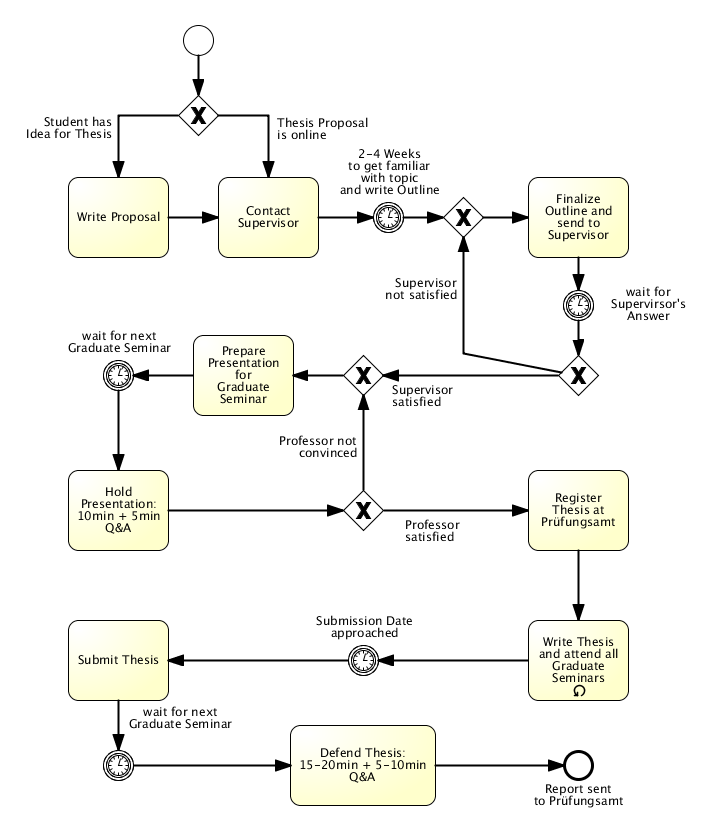

Workflow

The organizational process for a bachelor's, master's, or diploma thesis at SNET is described below:

- Contact the research group's office. The office checks with the team whether supervision is possible.

- You will receive a reply from one of our staff members or from the office.

- As soon as contact with a potential supervisor has been established, discuss your desired topic with them.

- You have 2-4 weeks to consider the topic and familiarize yourself with it.

- If you decide on this topic, we expect you to write an outline of your thesis during these 2-4 weeks, which we will assess. This outline helps you and us clarify the work that needs to be done within the scope of your topic.

- At the next Graduate Seminar, you must give an initial presentation of your thesis: 10 minutes + 5 minutes of questions and answers. Once you receive the final OK from the professor, you can proceed to step 5.

- Register your topic with the examination office. From now on, attendance at the Graduate Seminar is mandatory (your e-mail address will be added to a mailing list for invitations; please also check our website regularly).

- Several Graduate Seminars and meetings with your supervisor may take place while you work on the thesis.

- Submit your BA/MA thesis. Attendance at the Graduate Seminar is now no longer mandatory, except for your thesis defense. Bachelor's students give a 15-minute talk + 5 minutes of questions and answers, while master's students give a 20-minute talk + 10 minutes of questions and answers.

- After the defense, a report on the thesis, including the grade, is sent to the examination office.

Please note that the thesis must be submitted no later than 6 weeks before the end of the semester to ensure that you receive your grade in the same semester. The thesis must therefore be registered with the examination office at least 4 months + 6 weeks (bachelor's theses) or 6 months + 6 weeks (master's theses) before submission.

Please note:

When preparing your thesis, please observe the following:

- Please check the APO (general examination regulations) of Faculty IV, in particular § 13 (theses)

- Please write your thesis in German (BA) or English (BA/MA)

- A bachelor's thesis should be about 30-50 pages long, a master's thesis about 50-90 pages

- The abstract must be written twice, in German and in English

- Please use the chair's LaTeX template

- Do not copy text passages without citing the author (plagiarism)